Decision Making Endnotes

About these endnotes

This is where we provide references and in-depth information about everything in the Decision Making Playbook.

Acknowledgements

This playbook draws on decades of research by many women and men who dedicated their lives to the behavioral sciences so that we may make better decisions and live better lives. Thank you. Any errors or omissions are ours.

Why does decision making matter?

Decision making encompasses choices large and small, from which career to pursue to where to buy a sandwich. Optimal decision making requires considering every relevant feature of our options, but we do not always know everything or have time to fully research every decision. Often, we must make decisions with limited knowledge, time, or mental resources. We then rely on heuristics: methods that reduce the complexity of a decision and the effort it requires (Shah & Oppenheimer, 2008). In most cases, these mental shortcuts are appropriate and help us make a good decision more efficiently than we would if we considered every relevant piece of information (Czerlinski, Gigerenzer, & Goldstein, 1999). Using price as a heuristic for quality, for example by assuming that the more expensive of two products is the better one, is a good strategy most of the time (De Langhe, Fernbach, & Lichtenstein, 2016).

But because heuristics lead us to decide based on only part of the whole picture, they create biases: errors that are systematic. Our habit of using price as a heuristic for quality, for example, leads us to believe the same pain reliever is more effective when it’s sold at full price than at a discount (Waber, Shiv, Carmon, & Ariely, 2008). Another example is our tendency to judge how risky something is by how much it scares us, which is why we are more afraid of snakes than of showers, even though showers kill more people each year (Finucane, Alhakami, Slovic, & Johnson, 2000).

We are most likely to rely on heuristics inappropriately when we are constrained in our ability to fully think through a decision (Kahneman, 2003). In short, we are boundedly rational. We seek to make decisions rationally, but bias influences our decision making when we have too little time, energy, or information to fully consider all aspects of a decision (Simon, 1990).

While we can clearly see bias in others’ decisions, we are less able to recognize it in our own (Scopelliti, Morewedge, McCormick, Min, Lebrecht, & Kassam, 2015). This bias blind spot stems from using different information to evaluate decisions made by others and decisions made by ourselves. We judge others by the decisions they make, such as the people they pick for their teams and organizations. We judge ourselves by whether our own decision processes seemed biased to us, a more forgiving standard (Pronin & Kugler, 2007).

How do I encourage good decision making in others?

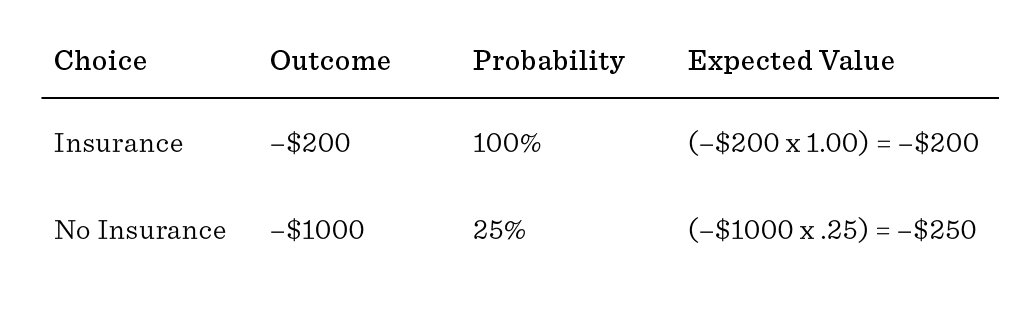

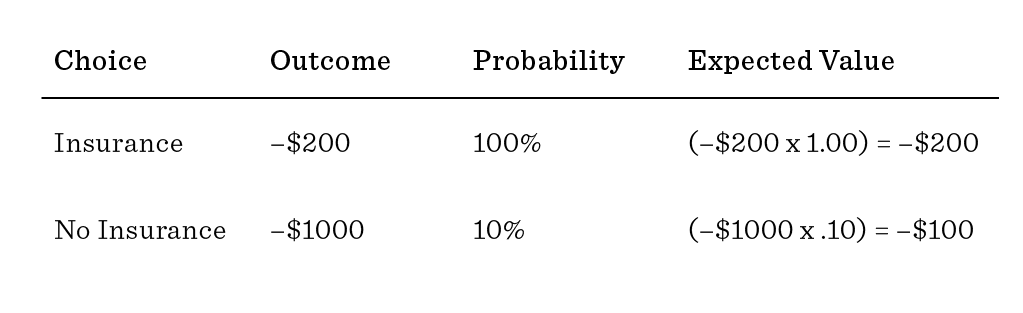

Expected value. A good way to decide which option is best is to think of the decision as a choice between gambles (Duke, 2019). Each option can lead to good and bad outcomes (with more or less utility), and each outcome may be certain or uncertain (with a larger or smaller probability). An option’s expected value is the sum of its possible outcomes, each weighted by its probability. If you spend $200 to buy insurance for your $1000 phone, for example, you’ve decided to lose $200 for sure rather than risk having to buy a replacement at full price. The expected value of this option is -$200 (-$200 x 1.0). Whether buying insurance is the better decision, then, depends on whether the chance that you lose or break your phone is greater than, equal to, or less than 20%. If you have a 25% chance of needing a new phone, the expected value of not buying insurance is -$250 (-$1000 x 0.25), so buying insurance is the better choice. If you have a 10% chance of needing a new phone, the expected value of not buying insurance is -$100 (-$1000 x 0.10), so not buying insurance is the better choice, even if you do end up breaking your phone.

When insuring your phone is the better decision:

When insuring your phone is the worse decision:
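As a rough sketch of the arithmetic in the phone-insurance example, the Python below computes expected value as a probability-weighted sum of outcomes and compares the two options. It assumes, as the example implicitly does, that the $200 premium covers one full replacement with no deductible and that the phone is lost or broken at most once. The names `Outcome`, `expected_value`, `insure`, `self_insure`, `break_even_probability`, and `better_choice` are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    value: float        # dollars gained (+) or lost (-)
    probability: float  # chance this outcome happens, from 0 to 1


def expected_value(outcomes: list[Outcome]) -> float:
    """Probability-weighted sum of outcome values."""
    total_p = sum(o.probability for o in outcomes)
    if not math.isclose(total_p, 1.0):
        raise ValueError(f"probabilities must sum to 1, got {total_p}")
    return sum(o.value * o.probability for o in outcomes)


# Figures from the example. Assumption (not stated in the text): the premium
# covers one full replacement with no deductible.
PREMIUM = 200.0
PHONE_PRICE = 1000.0


def insure() -> float:
    # Lose the premium with certainty.
    return expected_value([Outcome(-PREMIUM, 1.0)])


def self_insure(p_loss: float) -> float:
    # Either pay full price for a replacement, or pay nothing.
    return expected_value([
        Outcome(-PHONE_PRICE, p_loss),
        Outcome(0.0, 1.0 - p_loss),
    ])


def break_even_probability() -> float:
    # Chance of loss at which both options have the same expected value.
    return PREMIUM / PHONE_PRICE


def better_choice(p_loss: float) -> str:
    ins, no_ins = insure(), self_insure(p_loss)
    if math.isclose(ins, no_ins):
        return "either"
    return "insure" if ins > no_ins else "don't insure"


if __name__ == "__main__":
    for p in (0.25, 0.20, 0.10):
        print(f"{p:.0%} chance of loss: insure {insure():.0f}, "
              f"don't insure {self_insure(p):.0f} -> {better_choice(p)}")
```

Running it reproduces the comparison in the text: at a 25% chance of loss, insuring has the higher expected value (-$200 vs. -$250); at 10%, skipping insurance does (-$200 vs. -$100); and at exactly 20%, the break-even point of premium divided by replacement cost, the two options are equal.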

Avoid Confirmation Bias

Most of the time, we don’t feel indifferent to all of our options. We might hope one option is better, or the way a question is framed might lead us to expect that one option will be best supported by the evidence. Either can induce confirmation bias, a tendency to search for, interpret, and evaluate evidence in a way that supports our initial hunch or prediction (Nickerson, 1998). For example, we are more likely to ask people questions that confirm our existing beliefs about their personality (Snyder & Swann, 1978), and to question a criminal suspect in a way that would prove them guilty rather than innocent (Kassin, Goldstein, & Savitsky, 2003). Confirmation bias has even changed the course of history: it has been identified as a contributor to the United States’ decision to invade Iraq (Silberman et al., 2005).

References

Czerlinski, J., Gigerenzer, G., & Goldstein, D. G. (1999). How good are simple heuristics? In G. Gigerenzer, P. M. Todd, & the ABC Research Group (Eds.), Simple heuristics that make us smart (pp. 97-118). Oxford University Press.

De Langhe, B., Fernbach, P. M., & Lichtenstein, D. R. (2016). Navigating by the stars: Investigating the actual and perceived validity of online user ratings. Journal of Consumer Research, 42(6), 817-833.

Duke, A. (2019). Thinking in bets: Making smarter decisions when you don’t have all the facts. Portfolio.

Finucane, M. L., Alhakami, A., Slovic, P., & Johnson, S. M. (2000). The affect heuristic in judgments of risks and benefits. Journal of Behavioral Decision Making, 13(1), 1-17.

Kahneman, D. (2003). A perspective on judgment and choice: Mapping bounded rationality. American Psychologist, 58(9), 697-720.

Kassin, S. M., Goldstein, C. C., & Savitsky, K. (2003). Behavioral confirmation in the interrogation room: On the dangers of presuming guilt. Law and Human Behavior, 27(2), 187-203.

Morewedge, C. K., Yoon, H., Scopelliti, I., Symborski, C. W., Korris, J. H., & Kassam, K. S. (2015). Debiasing decisions: Improved decision making with a single training intervention. Policy Insights from the Behavioral and Brain Sciences, 2(1), 129-140.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220.

Pronin, E., & Kugler, M. B. (2007). Valuing thoughts, ignoring behavior: The introspection illusion as a source of the bias blind spot. Journal of Experimental Social Psychology, 43(4), 565-578.

Scopelliti, I., Morewedge, C. K., McCormick, E., Min, H. L., Lebrecht, S., & Kassam, K. S. (2015). Bias blind spot: Structure, measurement, and consequences. Management Science, 61(10), 2468-2486.

Sellier, A. L., Scopelliti, I., & Morewedge, C. K. (2019). Debiasing training improves decision making in the field. Psychological Science, 30(9), 1371-1379.

Shah, A. K., & Oppenheimer, D. M. (2008). Heuristics made easy: An effort-reduction framework. Psychological Bulletin, 134(2), 207-222.

Silberman, L. H., Robb, C. S., Levin, R. C., McCain, J., Rowan, H. S., Slocombe, W. B., . . . & Cutler, L. (2005, March 31). Report to the President of the United States. Commission on Intelligence Capabilities of the United States Regarding Weapons of Mass Destruction.

Simon, H. A. (1990). Bounded rationality. In J. Eatwell, M. Milgate, & P. Newman (Eds.), Utility and probability (pp. 15-18). Palgrave Macmillan, London. https://doi.org/10.1007/978-1-349-20568-4_5

Snyder, M., & Swann, W. B., Jr. (1978). Behavioral confirmation in social interaction: From social perception to social reality. Journal of Experimental Social Psychology, 14(2), 148-162.

Waber, R. L., Shiv, B., Carmon, Z., & Ariely, D. (2008). Commercial features of placebo and therapeutic efficacy. JAMA, 299(9), 1016-1017.